Recently BUG had a great presentation by Jonathan Linowes of Parkerhill XR Studio on the new multi-platform XR framework that is now in Unity 2019.3. This is a replacement for the existing legacy XR integrations that were built into the engine in recent years.

If you have been doing any VR development since the DK1, you have likely been through the ups and downs of Unity's VR integrations, which have been quite the ride. We have gone from custom plugins for individual platforms to in-engine integrations, all while fighting a constant battle between Unity versions and fixes on one side and the prefabs, tools, etc. layered on top of each platform's integration on the other. It has been a bit of a nightmare over the years, and it is very frustrating to maintain a project while being forced to upgrade Unity constantly just to fix VR bugs.

It is exciting to finally see Unity making progress on a cross-platform attempt to both abstract XR and decouple the implementations from the engine. The closest thing I have found to cross-platform VR, which isn’t really cross-platform but cross-device, is Valve’s SteamVR combined with the Interaction System, a port of the mechanics used in The Lab demos. This combination provides a basic foundation for building a VR app that works on Vive, Oculus, WMR, etc. It includes all the standard interaction techniques found in the original The Lab demos, as well as hand and controller models with proper grasping poses, tooltips, haptics, and so on.

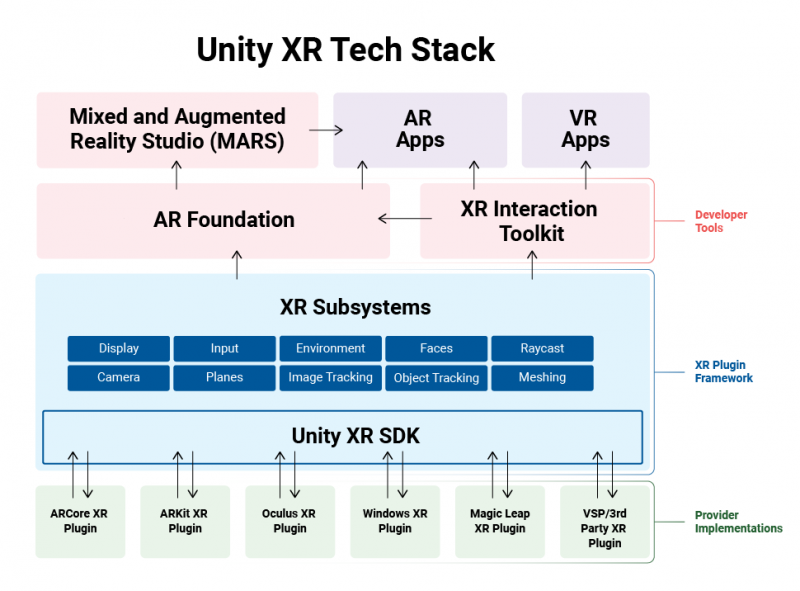

My hope for Unity’s new XR framework is that it will be the equivalent of this but broader, covering different platforms and devices and offering abstractions that span both VR and AR, especially headset-based AR like Magic Leap and HoloLens. It seems Unity is making some progress, but there are still areas that are missing.

Jonathan’s presentation has a variety of links covering the new XR framework, including the main entry points into the Unity docs and the forums.

As with all the previous shifts in Unity VR integrations, this one comes with some pain and uncertainty. For anyone in the middle of a project, it can be quite frustrating. The existing legacy integrations will continue to work in 2019.3, but there is always the concern of what will be updated and maintained throughout 2019 LTS. Hopefully the legacy path will remain stable enough to serve as a transition period until there is complete support for the new XR Plugin framework and the XR Interaction Toolkit has been further developed and refined.

For the projects I am working on, I have stayed away from the new framework because Valve did not yet support it via OpenVR. I am excited that they finally have some initial support; however, it appears that this support does not work through the new Unity XR Input system. I also wonder how hand and controller models, along with skeletons, poses, and grab animations, fit into the Unity XR Plugins and the XR Interaction Toolkit. I have not seen any examples that handle this across platforms and devices.

I guess there will always be the issue of custom capabilities that require integration with each platform. Where does a cross-platform solution end and where do per-platform solutions begin, and how do we as developers manage working against both? What about things like avatars? There will likely never be "write once, run everywhere", but how close can we get? Hopefully the answers to these questions will emerge over the Unity 2020 tech releases.